Artificial emotional intelligence

In contrast to the technocratic paradigm, artificial intelligence (AI) is becoming more emotional and seeking to be relational. A new, intuition-based cognitive mode has arrived.

2018 was a turning point, marked by the acceptance of AI across all sectors, including industry, finance and the media. The cover story of the December 2018 issue of the monthly magazine Wired, entitled “Less Artificial, More Intelligence”, sported a bold subheading, “AI is already changing everything”, and analyzed the latest developments. After mastering logic, pattern recognition, automation and predictive marketing, AI is now going after emotions.

While AI can be used for illicit political manipulation (as the Cambridge Analytica case reminds us), intuitive approaches also open up new avenues to explore and offer a way to help consumers reconnect with objects and with one another.

According to the market intelligence firm IDC, the AI market is worth 39 billion dollars, of which emotional AI already accounts for 20 billion. It is therefore time for brands to rethink how they win over and please customers.

Improving the fluidity of interactions

Speaking at SXSW in 2018, Siri co-founder Adam Cheyer said that the two questions most often put to Siri are “Will you marry me?” and “Do you love me?” Is this a sign of a growing urge to connect emotionally with machines? Maybe so. Still, although the GAFAM (Google, Apple, Facebook, Amazon and Microsoft) have a head start in the cognitive sciences, their aim has mainly been to “optimize and improve the customer experience”, says Luis Capelo, staff data scientist at Glossier and former head of Forbes Media’s Data Products team.

While Gartner forecasts that conversational agents will account for 50% of analytical queries by 2020, Alexa (Amazon), Google Home (Google), Cortana (Microsoft) and their sisters mainly promise interaction that is simpler, more intimate and more authentic than interaction through a screen. That is why Facebook has jumped on the bandwagon. Its home video chat device, Portal, launched at the end of 2018 and lets users connect with Amazon’s Alexa voice assistant via Facebook Messenger (the Portal account must first be linked to an Amazon Alexa account).

This is a major trend. In a recent interview with Artificial Solutions CEO Lawrence Flynn published on “The Motley Fool”, interviewer Simon Erickson commented that “we’ve seen some research that says that 85% of people won’t actually be talking to a human being in managing their relationship with a company, and that 80% of larger companies are planning to implement chatbots, or digital assistants, by 2020.”

“Braintech” and emotional analysis

Alongside these advances, the analysis of emotional behavior, that is, the study of emotions and of responses to stimuli (odors, sounds, words, textures and colors), is emerging as a new core business area. According to experts at Big Data Paris, the emotional part of our brain triggers the desire to purchase in 90% of cases.

Specialized algorithms are now used to gather data about individuals: Facebook’s FastText for text, the Allo-media “vocal cookie”, which performs semantic analysis of phone conversations, and facial coding algorithms, which open up even broader perspectives. Facial coding has drawn considerable attention because of the surveillance systems introduced in China, but, building on the work of psychologist Paul Ekman in the 1960s, it is poised to revolutionize how emotion data are processed at scale.

Real Eyes, Beyond Verbal and Affectiva, all presented at CES 2018, take a “feeling data” approach and position themselves in emotion-based biofeedback technology. Lexalytics and TheySay already offer advanced social media and sentiment analysis capabilities using machine learning and natural language processing (NLP) algorithms.

Sensory AI

On the external data side, the fragrance and food industries may be in for a shake-up. The French company Alpha MOS markets electronic noses and tongues, while the U.S. company Aromyx produces sensor systems that “reveal the same biochemical signals that the nose and tongue send to the brain in response to a flavor or fragrance”.

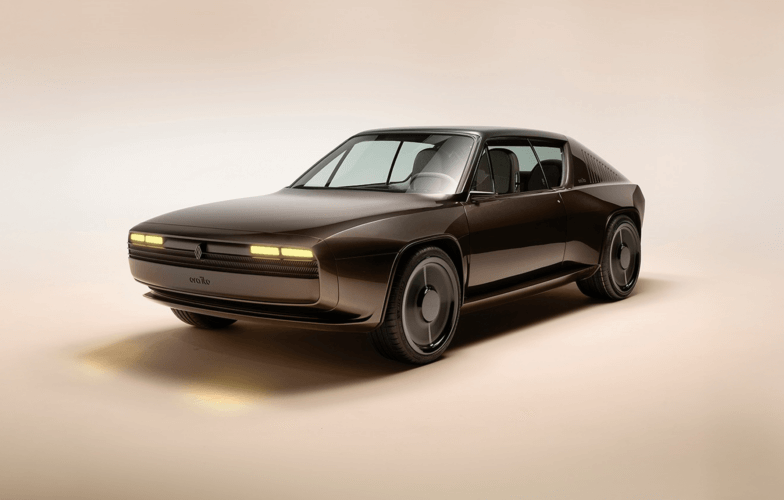

Anna Tcherkassof, a member of the International Society for Research on Emotion and a specialist in man-machine interaction, notes that these analyses remain imperfect and cannot yet detect cultural variations; in some countries, for example, there is no word for “sadness”. Even so, she believes this personalization will also bring a new approach to safety. Eyeris, for instance, offers “in-vehicle scene understanding AI for autonomous and highly automated vehicles” with multimodal sentiment analysis that monitors the driver’s emotions.

“Yes” to intelligence, “no” to indifference

But the crux of the matter remains humans’ intuitive connection with AI.

How should it be developed and based on what criteria?

Man-machine relations are a popular theme in science fiction, as shown by the series Real Humans in Europe, the series Almost Human and the film Her (2013) in the United States, and the play “Three Sisters, Android Version”, directed by Oriza Hirata, in Japan. The same idea emerges in each case: the machine can relate to humans on an emotional level.

The year 2017 was a milestone in this respect. The Japanese company Gatebox introduced a new version of the female alter ego Azuma Hikari, the first virtual “girlfriend” targeting singles, especially single men. In a different area, there are the computer-generated models Miquela Sousa (1.5 million Instagram followers) and Shudu, as well as the virtual influencer Noonoouri, who recently posed with Kendall Jenner at a New York Fashion Week launch event.